rats & children

simulation-based sequencer

Reflection:

A few days after the presentation, there are a few things I want to highlight about this project. For a very long time, I’ve wanted to take on technically challenging tasks, and I’ve aimed for my designs to reflect complexity and structure. At the same time, I’ve been meaning to go back to the root and create something meaningful — something that conveys how I see the world and perhaps allows me, through emotion, to experience a reality different from my own.

What makes me happiest about this sequencer probably has nothing to do with the implementation itself. Instead, it’s about reconnecting with the very thing that inspired me to work with technology in the first place: expression.

I have nothing specific to say about Rats & Children — instead, I want to thank Kunwoo and Andrew for creating this space and for motivating me to reconnect with a more creative part of myself that I deeply love. Cheers!

code can be found here

Periphery

Inspired by Mark Weiser's concept of calm technology and Pedro Sodre's idea of contemplative software, I created a tool designed to promote calm and mindfulness. Periphery helps users combat "screen apnea" while working at the computer and provides a gentle way to reconnect with their body after a long day.

The idea is that Periphery operates in the background or alongside whatever the user is working on—it should not demand too much attention. The current version offers two options for auditory feedback to mark inhale/exhale triggers, as well as four ambient sound options: Drone, Forest, Café, and Brown Noise.

Periphery running in the background: writing a simple p5.js sketch

Periphery running alongside: analyzing EEG data in Brainstorm and MATLAB

code can be found here

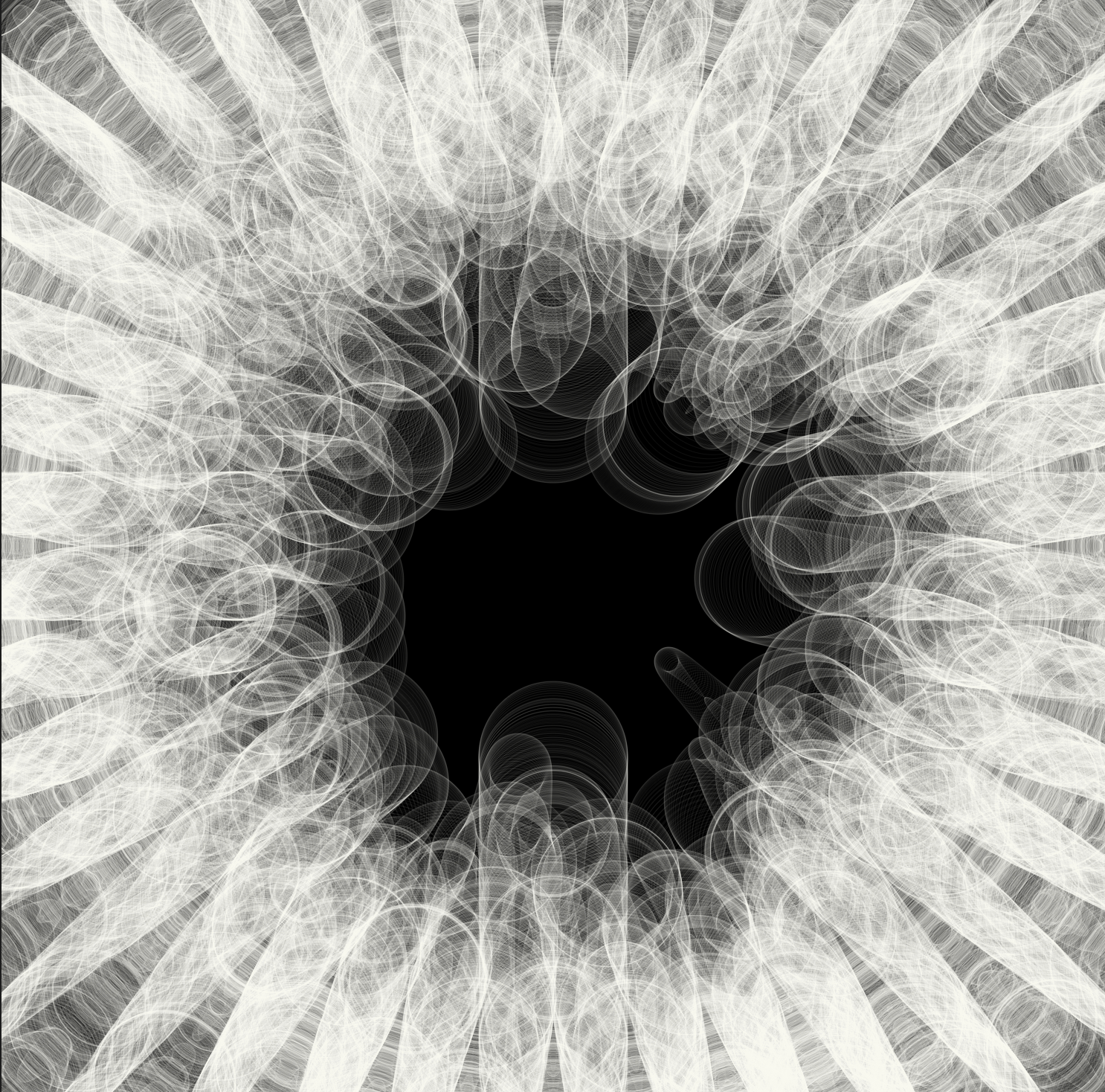

Sacred Vis

Sacred Vis is a psychedelic visualizer and instrument that encourages users to explore the nature of sound by interacting with the computer’s microphone. Although simple, its design allows for dynamic shapes and patterns, making the experience unique with every timbre and pulse.

The process of creating Sacred Vis involved hours of debugging ChuGL, often with complete uncertainty about the direction it was heading. Since I was exploring a new tool (programming language), I allowed myself the freedom to experiment with its strengths and weaknesses before forming a clear vision of what I wanted to create. Overall, the experience was very satisfying, and I now feel confident embarking on new projects with ChuGL as a valuable ally.

Difficulties: The main challenge has been properly recording the screen. Although it sounds simple, the quality drops significantly with every software solution I've tried. I believe this is due to the thin lines in the visualizations, but there must be a better way! On the audio side, I encountered some difficulties implementing more complex synthesis techniques. For example, when using LiSa for granular synthesis, my graphics window stopped working. I've already informed Andrew about this and will investigate further soon.

Thank you so much to the teaching team! Your examples have made a huge difference, but most importantly, it's inspiring to share work with people who genuinely care—it makes putting in the hours much easier.

The code can be found here

artful design - manifesto

listen to this piece while reading this reading response:

In a world saturated with sensory input, slowing down abruptly can feel daunting. This piece explores the concept of using a low-pass filter in music to create the effect of sound coming from another room. By doing so, the stimuli demand less direct attention while still occupying the periphery of awareness. I’ve been increasingly fascinated by Mark Weiser’s notion of calm technology and the idea that good design exists in the periphery of attention. Perhaps granting agency over how much presence a stimulus has in our awareness could be a valuable step in the pursuit of calm.
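The "other room" effect described above can be approximated with a very simple one-pole low-pass filter. What follows is a minimal sketch in plain JavaScript, not the code behind the piece: the function names (`onePoleLowPass`, `peak`, `sine`), the 500 Hz cutoff, and the 44.1 kHz sample rate are all assumptions chosen for illustration.

```javascript
// Minimal one-pole low-pass filter (illustrative sketch, not the piece's actual code).
// cutoffHz and sampleRate are assumed values chosen for the example.
function onePoleLowPass(samples, cutoffHz, sampleRate) {
  // Smoothing coefficient derived from the cutoff frequency.
  const a = 1 - Math.exp((-2 * Math.PI * cutoffHz) / sampleRate);
  const out = new Array(samples.length);
  let y = 0; // previous output sample
  for (let i = 0; i < samples.length; i++) {
    y += a * (samples[i] - y); // move a fraction of the way toward the input
    out[i] = y;
  }
  return out;
}

// Peak absolute value, skipping the first samples while the filter settles.
function peak(samples, skip = 0) {
  let m = 0;
  for (let i = skip; i < samples.length; i++) m = Math.max(m, Math.abs(samples[i]));
  return m;
}

// Build a test tone at a given frequency.
function sine(freqHz, sampleRate, length) {
  return Array.from({ length }, (_, n) => Math.sin((2 * Math.PI * freqHz * n) / sampleRate));
}

const sampleRate = 44100;
const low = onePoleLowPass(sine(100, sampleRate, 44100), 500, sampleRate);
const high = onePoleLowPass(sine(8000, sampleRate, 44100), 500, sampleRate);
// The low tone passes almost unchanged; the high tone is strongly attenuated.
console.log(peak(low, 4410).toFixed(2), peak(high, 4410).toFixed(2));
```

Lowering the cutoff pushes the sound further "into the next room", which is one way to give a listener control over how much presence a stimulus has.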

Design is the embodied conscience of technology — the "why" behind the "what" and "how." In a world fragmented by echo chambers and radical individualism, design carries the weight of responsibility. For me, creating technology is about one simple yet profound goal: removing friction from the human journey of self-discovery. It’s about fostering awareness of our role in this world (and perhaps others) as we seek balance, beauty, and compassion. When guided by thoughtful design, technology can embody freedom, empathy, and expression, paving the way toward eudaemonia.

greatest hits

“What purpose does the transformation into a zipper serve? Seemingly NONE, but it’s also what makes the thing awesome”

“Nothing is going to be as good at being a cello—other than a cello.”

“Use the computer as an agent of transformation”

“Interfaces should extend us (and not replace us)”

“What we make, makes us”

Social Design

Social design, at its core, grapples with one of the toughest challenges in the human experience: how to meaningfully account for diverse perspectives and needs without simplifying or erasing them. It’s an alluring goal, but often a flawed one, as the very nature of social design can unintentionally flatten the complexity of human interactions in its attempt to be universally accessible or inclusive. By creating frameworks for collective participation, social design risks imposing invisible structures on our behavior, nudging us toward predetermined paths and assuming uniformity in our responses. This approach can undermine the very individuality it seeks to preserve, shaping spaces that may feel more like controlled environments than genuinely open ones. While intended to foster connection, social design can inadvertently streamline our interactions to fit into neat, predictable patterns, reducing the richness of social encounters to something convenient and consumable. In reality, true plurality is messy and difficult, defying neat categorization or total accessibility, and the push to design "for everyone" can sometimes dilute the authentic, complex dynamics that naturally arise when individuals come together.

Experiment

Barbara’s Pauline Oliveros-inspired meditation was something else. I felt so relieved and connected, not only to the people of the class but to myself; it was truly beautiful. I got home and wanted to work on something that could, in a modest way, capture some of the voices that I played with during the meditation.

Programmability & Sound Design

*** listen to this piece while reading this reading response: This is the first algorithmic piece of music I ever created; it marks the exact moment when I fell in love with computer-based music.

A Short Story

The year was 2018. I had just arrived at Berklee College of Music without a clear sense of what I wanted to study. What I did know was what I didn’t want: music. For me, making music was too precious and personal to turn into a profession. The idea of becoming proficient on my instrument and composing music for a living made me deeply skeptical. “So what are you doing here?” many people asked. My answer was always the same: Brian Eno. I was fascinated by the idea of creating a set of instructions that could generate music—music that could do things I wouldn’t, music that could teach me something about reality. It wasn’t a reflection of me, but a dialogue with me.

There was a lot of uncertainty in this journey. At one point, I even considered dropping out of music school to study computer science and transcendental meditation. But in the end, I stayed. I found a group of people who worked with strange-looking circuits and called themselves computer musicians, and eventually, I became one of them. The notion of programmability has not only shaped my relationship with art, but with many aspects of my life—it has made me appreciate the inner mechanisms of reality and how well-designed it truly is.

The first Max patch I created generated endless combinations of chords, inspired by the way my roommate used to play piano when we lived in Boston.

I resonate deeply with the book for obvious reasons, but this particular chapter felt especially reassuring, as it echoed many thoughts I’ve had on my own journey as a creative technologist and computer musician. I recently read A Mathematician's Lament by Paul Lockhart, in which the author presents a compelling scenario: imagine music was an obligatory subject in school, with the primary focus on nomenclature—reading and writing music. Imagine music being taken very seriously—not only as the clearest indicator of intelligence but also the most essential skill for success in the ‘real world.’ Now, imagine you don’t actually get to listen to music until college, meaning many people would never hear it at all. Your entire relationship with music would be based on notation. This, Lockhart argues, is exactly the case for mathematics.

I believe Ada Lovelace’s concept of poetic science might offer an antidote to this mathematical crisis. For me, disciplines like creative coding and computer music are rooted in the possibility of fostering a more interactive and playful relationship between our raw perceptions and the abstract world. Through creativity, we artfully engage with a logical, math-based framework for understanding reality, ultimately bypassing the imposed ‘seriousness’ of a STEM-focused view of the world.

Automation as an Aesthetic Tool

Example of using chaos to modulate audio parameters from a synthesizer

Example of using chaos to modulate the position of the audio source

Example of using physical movement to compose electroacoustic music
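One simple way to obtain chaotic modulation of the kind named in the first two captions is the logistic map. This is a hedged sketch, not the method used in those examples: the choice of map, the value `r = 3.9`, and the 200 to 2000 Hz cutoff range are assumptions made purely for illustration.

```javascript
// Logistic map as a chaotic modulation source (illustrative assumption,
// not necessarily the chaotic system used in the examples above).
function logisticSeries(x0, r, steps) {
  const xs = [];
  let x = x0;
  for (let i = 0; i < steps; i++) {
    x = r * x * (1 - x); // stays in [0, 1] when 0 <= r <= 4 and x0 is in [0, 1]
    xs.push(x);
  }
  return xs;
}

// Linearly map a value in [0, 1] onto a parameter range.
function scale(x, min, max) {
  return min + x * (max - min);
}

// r = 3.9 is a commonly used value in the map's chaotic regime;
// 200 to 2000 Hz is a hypothetical filter-cutoff range.
const cutoffs = logisticSeries(0.5, 3.9, 16).map((x) => scale(x, 200, 2000));
console.log(cutoffs.map((hz) => Math.round(hz)));
```

The same series could just as well drive the pan position or distance of a sound source instead of a filter cutoff.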

Key Design Principles

Principle 4.6: Use the computer as an agent of transformation.

Excited about: Cellular Automata

Principle 4.8: Experiment to illogical extremes (and pull back according to taste).

Application: Juancho Lagartos IG Project – I'm challenging myself to write a song a day, and this will be very useful.

Principle 4.11: There is an art to programming.

Question: How is this art evolving as we increasingly bypass programming languages and rely more on natural language?

research: sequencer

This collection showcases different approaches I’ve taken to sequencer design — all of them are my own, except for the Segregation Model:

Nav Mech Unity Agents: A visually driven system where agents navigate within a dynamic environment. Their movements respond to changing elements within a plane, demonstrating emergent behaviors. While not sound-based, this system explores how micro-behaviors can generate complex patterns and events, offering a model for agent-based simulations.

Schelling's Segregation Model: A visual representation of Schelling’s model, featuring agents as colored blocks that move based on their neighbors. Though not connected to sound, it highlights how simple rules can create larger patterns, suggesting possibilities for triggering events in other domains, such as audio.

VR Sonic Sketcher: A VR drawing app that transforms visual art into a musical interface. Users paint in a 3D space, with each brushstroke triggering and adjusting synth parameters, creating a seamless blend of visual and auditory elements. This immersive setup allows for simultaneous visual and musical composition.

Turntable Sequencer: A turntable-inspired interface where users position objects on rotating platforms, each acting as a sound trigger. Changing rotation speeds and object placements modifies the rhythm, giving users hands-on control of sequencing and timing, similar to a DJ's manipulation of a turntable but with added spatial interaction.

Lines&Dots: A sequencer that maps drawn lines to sonic parameters. Each line segment directly influences sound elements like pitch and amplitude, linking visual geometry to audio characteristics. This approach makes visual design a core part of musical composition, blending visual and auditory creativity.

sim.seq

For this project, I'm developing a multi-agent simulation centered around agents moving within a circular boundary using Brownian motion. Here’s how the system works:

Events

Agent Instantiation

Visual: The agent automatically starts moving around the circle, accompanied by a particle effect.

Sonic: The agent loops a distinctive voice sound.

Agent Exits the Circle

Visual: The agent gradually shrinks when it moves outside the circle.

Sonic: A dying sound effect plays, indicating the agent's decay.

Agent Collision

Visual: The colliding agents' colors invert momentarily, marking the interaction.

Sonic: A note from a pentatonic scale plays, adding a musical element to each collision.

Agent Enters a Special Zone

Visual: The agent enlarges and displays a particle effect to highlight the zone’s significance.

Sonic: The agent loops a louder, distinct shout sound, signaling entry.

Interactions

Clicking: Instantiates a new agent, adding it to the simulation.

Sliders: Control various audio-visual parameters, allowing adjustments to sound effects, agent behaviors, and visual elements.

Button: Restarts the entire simulation, resetting all agents and parameters to their initial states.

This setup blends visual and sonic feedback to create an engaging interactive experience, emphasizing the dynamic interactions between agents within a constrained environment.
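The event logic above can be sketched in a few lines. This is a simplified illustration under stated assumptions, not the project's implementation: the names (`step`, `RADIUS`, `AGENT_SIZE`, `PENTATONIC`), the constants, and the MIDI note numbers are hypothetical, Brownian motion is approximated by a uniform random step, and the special zone, visuals, and audio playback are left out.

```javascript
// Sketch of the sim.seq event logic (hypothetical names and constants throughout).
const RADIUS = 100; // circle radius (assumed units)
const AGENT_SIZE = 5; // agent radius used for collision tests (assumed)
const PENTATONIC = [60, 62, 64, 67, 69]; // C major pentatonic as MIDI notes (assumed scale)

// Advance every agent by one random step and report the events that occurred.
function step(agents, rand = Math.random, stepSize = 2) {
  const events = [];
  for (const a of agents) {
    if (!a.alive) continue;
    // Uniform random displacement as a simple stand-in for Brownian motion.
    a.x += (rand() * 2 - 1) * stepSize;
    a.y += (rand() * 2 - 1) * stepSize;
    if (Math.hypot(a.x, a.y) > RADIUS) {
      a.alive = false; // the real system shrinks the agent and plays a dying sound
      events.push({ type: "exit", agent: a.id });
    }
  }
  // Pairwise collision check among the agents still inside the circle.
  const live = agents.filter((a) => a.alive);
  for (let i = 0; i < live.length; i++) {
    for (let j = i + 1; j < live.length; j++) {
      const d = Math.hypot(live[i].x - live[j].x, live[i].y - live[j].y);
      if (d < 2 * AGENT_SIZE) {
        const note = PENTATONIC[Math.floor(rand() * PENTATONIC.length)];
        events.push({ type: "collision", agents: [live[i].id, live[j].id], note });
      }
    }
  }
  return events;
}

const agents = [
  { id: 0, x: 0, y: 0, alive: true },
  { id: 1, x: 3, y: 0, alive: true },
  { id: 2, x: 150, y: 0, alive: true }, // starts outside the circle
];
const still = () => 0.5; // rand() = 0.5 gives zero displacement, for a predictable demo
const events = step(agents, still);
console.log(events);
```

Returning a list of events, rather than triggering sounds directly, keeps the simulation separate from whatever audio engine ends up consuming them.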

Game Design

I grew up playing Free Rider 2, a simple bicycle game where you create your own track by drawing it. Though simple, this game was the first instance of me recognizing the computer as a tool for creativity — with endless, testable design options coming to life instantly! It’s for this reason that I deeply value incorporating principles from game design when creating for active participation, such as instrument building. I strongly believe that ‘playing’ is perhaps the purest way of accessing some of our more genuine interactions and behaviors that are often repressed or consciously ignored. Playing music, for example, can momentarily bypass the ego — there’s something truly magical in that experience. Here is my short list of principles that stood out to me this time:

6.7 — All games require interaction and active participation

Yes, but we get to decide the scale and magnitude of involvement. Some games actively explore passivity, while others do the opposite.

6.8 — All games are played in hyper-first person

Brian Eno has a fantastic quote explaining how art is a type of simulation — we get to experience what it’s like to live in a dystopian society while reading 1984, without actually renouncing our agency. Ge explores a similar idea with sci-fi, as a way to imagine "what ifs" before we can technically achieve them.

6.18 — Gamefulness and fun do not have to come at the expense of expressiveness

Free Rider 2 is a perfect example of how fun and gamefulness can emerge from the level of expressiveness allowed to the player.

6.20 — The Tofu Burger Principle

A fantastic concept! As a vegetarian, I often question the idea of replacing traditional animal-based dishes with plant-based equivalents. Instead, I find it more interesting to explore the unique possibilities of plant-based foods.

6.21 — Lowering inhibition for intended behavior by gamifying expressive experiences

Yes, we must reward self-expression and the willingness to push the limits of our designs!

Final Thought:

That Dragon, Cancer looks incredibly moving. It’s the kind of game that makes me want to delve deeper into games as an art form. I’ll be sure to ask my classmates for recommendations on artful games. It’s a medium I haven’t explored as much, but I’m eager to!

Interface Design

*** listen to this piece while reading this reading response: This piece is a ‘space’ where I go when I need to slow down.

Principles that I resonated with:

5.4 — Bodies matter.

5.5 — Have your machine learning and a human in the loop.

5.19 — Interfaces should extend us (and not replace us). We want tools, not oracles.

I'm feeling a bit stressed; there’s a lot to do, and I haven’t been able to do much besides work this weekend. Is there a way I could express these emotions without relying on natural language? Music can be a way, but not everyone can play/create music — it has a relatively steep learning curve. If interfaces are meant to extend us, there’s still a long way to go in translating our diverse, multidimensional expressions into and out of the computer.

Human-computer interaction fascinates me because it presents many challenges and opportunities: Can we share our physiological and psychological states with others? Or could these states influence how the computer interacts with us (affective computing)? Will large language models replace programming languages and reduce the need for many interfaces? Could brain-computer interfaces eventually replace screens? I don’t have answers to these questions, but I’m definitely interested in exploring them.

Bodies matter — As I got deeper into computer art/music and especially extended reality (XR), I became more familiar with the tools that shape immersive audiovisual experiences. But I often found the 'computer to platform' workflow clunky when designing for this medium.

I noticed three main issues:

2D screens don’t really capture the physicality of the person using them, creating a gap between the simulation and the actual experience.

You can’t easily make changes when you’re inside these spatial experiences, which keeps a frustrating distance between creating and experiencing.

The workflow doesn’t fully align with accessible tech principles because most physical inputs are still limited to just using your hands.

By including the body itself as an interface, we can bridge this gap, making the design of 3D and immersive experiences feel more natural and intuitive. When the body is part of the interaction, the distinction between creating and experiencing blurs, allowing for more fluid and engaging environments.

Have the human in the loop — This point is especially important because it directly impacts our collective physical and mental health. The attention economy created a race to the brainstem, deeply affecting the reward systems of an entire generation. Now, with high-fidelity generative agents, many argue that the race has shifted to intimacy — with products like Replika and other chat platforms where users develop romantic relationships with AI.

Beyond the sociocultural implications of these trends and interfaces, the real concern lies in the pace of AI's advancement compared to the much slower evolutionary pace of human biology. This is a clear example of design not keeping humans in the loop.

I do fear the consequences. Our designs need to take pace into account.

Interfaces should extend us (and not replace us) — a musical instrument doesn’t replace the musician; it amplifies their creativity and expression. Similarly, a good interface should enhance our ability to learn, create, and communicate without removing the human element. It should provide intuitive access to complex systems, making it easier for us to navigate information, connect with others, or explore creative possibilities.

Visual Design

*** listen to this piece while reading this reading response: it’s a paul-stretched version of David Bowie’s “Sound and Vision”

In a world dominated by dichotomies, the concept of multimodality can be seen as subversive.

Growing up, I recall having to choose between music or art class at school—these subjects were considered so distinct that it was rare to find someone who both made music and painted. In the framework of artful design, however, interactions are crafted through the interplay of different sensory grammars. When combined, they create a powerful multi-sensory/multimodal experience.

Sound as a Lingua Franca:

For many years, I’ve identified as an auditory learner—not because I inherently learn better through listening, but because my understanding of sound often translates into other disciplines. For instance,

- when learning about animation, I wondered if there was an equivalent to delay in digital signal processing, and the answer was a trailblazer

- when learning about the visual cortex, I wondered if there was an equivalent to tonotopic mapping in the cochlea, and the answer was retinotopy

The instances where direct mapping exists between different senses provide clear evidence of how valuable it can be to design thinking in multimodal terms.

Easing Functions

This chapter felt particularly relevant to me, not only because I’m interested in visual design, but also because easing motion was a key aspect of my work as a 'game feel' developer. My role, alongside audio-haptic design, involved ensuring that every object moving in VR had a smooth ease function — and after reading this, there’s a lot I wish I had known!

Humans can easily detect the unnaturalness of linear movement, which often breaks immersion. As designers, it’s our responsibility to bring life into our animated or simulated objects, and giving them personality through motion is a great start. A few days ago, I read an article called The Role of Emotion in Believable Agents, where the author states, 'motion brings an emotional undercurrent to action.' If that’s true, then easing functions could be seen as an emotional grammar that I’m just beginning to discover.
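To make the contrast with linear movement concrete, here is a minimal sketch of two widely used easing curves and a tween helper. The function names and the 0 to 100 range are assumptions for illustration; this is not the code from the VR work or the book.

```javascript
// Two common easing curves; t is normalized time in [0, 1].
function easeInOutCubic(t) {
  // Slow start, fast middle, slow finish.
  return t < 0.5 ? 4 * t * t * t : 1 - Math.pow(-2 * t + 2, 3) / 2;
}

function easeOutQuad(t) {
  // Fast start that decelerates into the target.
  return 1 - (1 - t) * (1 - t);
}

const linear = (t) => t;

// Interpolate between two values using an easing curve instead of raw time.
function tween(from, to, t, ease) {
  const clamped = Math.min(1, Math.max(0, t));
  return from + (to - from) * ease(clamped);
}

// Compare linear and eased positions at a few points in time.
for (const t of [0, 0.25, 0.5, 0.75, 1]) {
  console.log(t, tween(0, 100, t, linear), tween(0, 100, t, easeInOutCubic));
}
```

Both curves start at 0 and end at 1, so swapping one for another changes the character of a motion without changing where it begins or ends.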

Talking about easing functions

Echoing Ge’s love for spiral-like shapes, I decided to make a simple sketch in p5.js that uses some of these ease functions and applies the following design principles:

3.2 → Animate!

3.4 → Simple form!

3.5 → Build complexity from simplicity!

Also inspired by Kunwoo’s example of circles that feel like rain, this sketch is based on a very simple shape that starts looking interesting once many layers are instantiated.

Designing Expressive Tools

*** listen to this piece while reading this reading response: the music was created exclusively with GarageBand instruments

I was 8 years old when Apple launched the first iPhone. Skeptics argued that like many things designed by Steve Jobs, the iPhone—and apps like Sonic Lighter—were just self-indulgent takes on tool-making. Today, the iPhone is one of the best-selling phones in the world, and it’s the very ethos behind apps like Sonic Lighter that has made this technology so appealing.

Fun, inclusive apps like Sonic Lighter, Ocarina, and Smule Sing! allowed many people from my generation to engage with technology in ways that weren’t limited to the 'technically gifted.' These apps removed the unnecessary friction we often feel when learning new technologies, making it easier to experiment with software and music in socially stimulating ways.

The nature of capitalism has enabled us to achieve great things as a species, but it has also made us highly goal-oriented, often overly preoccupied with how our actions will benefit us in the future. In contrast, the philosophy of artful design offers a liberating perspective by evaluating the role of science fiction in our technological evolution:

'Science fiction and design have something in common: at their core, they aren't really about science or technology, but about the questions humanity doesn’t yet have answers to—or even know to ask.'

This mentality allows us to explore without being bound by categorization or practicality - whether it is in the context of making software, music, or art! Making allows us to venture into unexplored territory where no formal framework exists to 'index reality.' Instead, we are encouraged to adopt low-floor, high-ceiling practices that invite more explorers to interact with this ethereal new domain. Following this logic, we see how many new technologies, such as generative agents and virtual reality, are first tested in the gaming world before being used in more ‘serious’ applications.

“Music = Power to Change Society”

Resonance: This chapter has particularly resonated with some of the questions I've had since arriving at Stanford. Sometimes, I struggle with how to justify—both to myself and others—spending hours 'playfully' exploring art in such a complicated world. Many of my friends are conducting cancer-related research, fixing genes, or studying to become doctors, while my practice seems unrelated to those pursuits. However, the book has made me feel a bit less alone in my search for meaning. It’s about making the beauty in things more apparent and potentially bringing calm through technology.

Principle 2.6: Technology should create calm.

This made me realize that many of the things that bring me calm were often created without that specific intention—just for the sake of doing them, without much ambition. These things only made sense once I was able to view them from a different perspective.

Talking about different perspectives: An Example

My Ecuadorian friend Thomas and I have been working on illustrating the idea that it’s often difficult to understand how a particular event will unfold while it’s happening. We tend to see things from a single dimension, when in reality, they are not only multidimensional but also connected in ways we could never imagine. Thomas asked me to create an illustration where a point (an event), when viewed from a different axis, reveals itself as a line, then a plane, and eventually a cube, and so on.

Perhaps this acknowledges that many of the things we do without apparent reason end up connecting us to people and events in unexpected ways. I think that's part of the magic: our perception of time as a linear progression allows us to be surprised when we later realize that the 'line' was actually something more connected, like a hypercube. In a way, this realization brings me a sense of calm.

One final thought

I particularly enjoyed the interview where Ge was asked the serious question, 'Will computer-based instruments someday replace traditional instruments?' I believe most artists who work with technology realize that the most interesting pursuits are often not about replicating reality but about using the unique affordances of a specific medium to create something that otherwise couldn’t exist.

As a virtual reality designer, I draw inspiration from nature, but I would never claim that the goal of working in VR is to replace it. On the contrary, working with this kind of technology has made me more aware of the beauty and elegance of the material world. As Ge aptly put it, 'Nothing is going to be as good at being a cello—other than a cello.'

Artful Design: Design is ____

“What purpose does the transformation into a zipper serve? Seemingly NONE, but it’s also what makes the thing awesome”

1 idea: In a world fueled by automation, fast cars, and fast food, the simple act of slowing down or doing something without expecting anything in return becomes a subversive one. The philosophy of artful design offers a practical yet beautiful framework for contemplating the sublime, enabling a deeper appreciation of both the design of reality as a whole and its individual components. This lens, while useful, encourages us to perceive objects as ends — valuing them for the aesthetic experiences they provide, rather than just their pragmatic functions.

Artful design takes on a Spinoza-like approach, fostering a more symbiotic relationship between the observer and the world. Through interaction, the architectural complexities of nature guide our processes of creation.

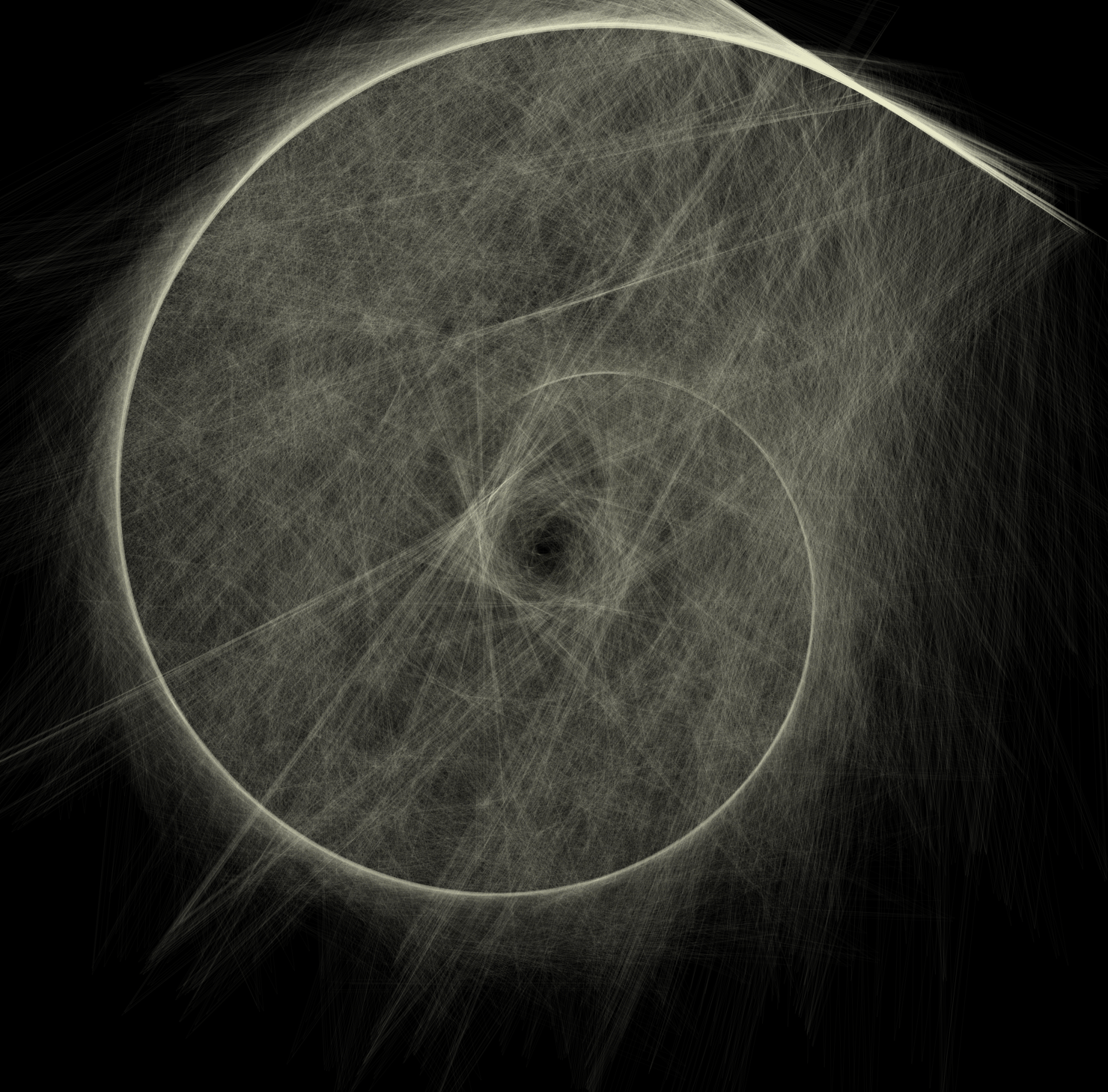

Lorenz Attractor, a.k.a. the butterfly effect

Greg Dunn’s neuro art

2 Questions:

If we approach the world through the lens of artful design, akin to Spinoza's symbiotic relationship between observer and nature, how might this philosophy change the way we create, consume, and experience the environments we inhabit?

How does viewing objects as ends in themselves, rather than as mere tools for pragmatic purposes, reshape our relationship with the world around us? Can this shift in perspective influence how we design and interact with technology?

— maybe, as a designer and technologist, this comes with a responsibility…

3 Principles that grabbed my attention:

Principle 1.9: "Design is Addition — when intentionality exceeds methodology, we invent." —> The act of instantiation can feel like momentarily accessing the Platonic World, seeking the immaterial to create an instance of it in the material world. Through the philosophy of artful design, one becomes susceptible to such metaphysical quests, guided by a framework that facilitates this 'extraction' or instantiation.

Meta Principle 1.4: "Design is both precise and tacit, embodying aspects of engineering and something more akin to art. Present but invisible." —> Good design is seamless — it’s everywhere but it doesn’t always need acknowledgment. I think good design removes all the unnecessary friction in our interaction with objects.

Principle 1.11: "Design is constraints — which give rise to interactions, and, in turn, aesthetics." —> It feels a bit like carving or subtractive synthesis, its shape is given by cutting what we don’t want.

Talking about constraints: An Example

A few weeks ago, I started recording an album after almost five years away from it. For this project, I enlisted two of my favorite people in the world to help me co-produce a seven-song LP. However, there was a catch — the three of us are producers who are used to working alone. I was curious to see how the studio dynamics would unfold, but time was limited, so I needed to ensure we were all on the same page.

Long story short, I began developing a desktop program that combines the I Ching, Alan Watts' teachings, Rick Rubin's The Creative Act, and Brian Eno’s Oblique Strategies. We called it 'Studio Oracle'.

program was created in p5.js and can be accessed here

The program assigned us roles at the start of every session and provided a couple of suggestions for the overall direction of that session.

Some roles included:

the mediator

the critical

the receptive

Some session strategies included:

use an old idea in a new way

imagine different contexts for your work and how these changes affect the outcome

listen to your project at different volumes to find new perspectives

In the end, incorporating these limitations into our dynamic introduced an interesting and perhaps unique way of interacting in the studio. We all knew our roles for each session, and having ‘strict’ predefined roles actually allowed us to be more playful in other creative aspects.
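A session draw like the one described above could be sketched as follows. The role and strategy names come from the lists above; the function names (`shuffle`, `drawSession`), the placeholder producer names, and the choice of two strategies per session are assumptions, and this is not the actual Studio Oracle code.

```javascript
// Hypothetical sketch of a "Studio Oracle"-style session draw.
// Role and strategy names come from the text; everything else is assumed.
const ROLES = ["the mediator", "the critical", "the receptive"];
const STRATEGIES = [
  "use an old idea in a new way",
  "imagine different contexts for your work and how these changes affect the outcome",
  "listen to your project at different volumes to find new perspectives",
];

// Fisher-Yates shuffle on a copy, so the input array is left untouched.
function shuffle(items, rand = Math.random) {
  const out = items.slice();
  for (let i = out.length - 1; i > 0; i--) {
    const j = Math.floor(rand() * (i + 1));
    [out[i], out[j]] = [out[j], out[i]];
  }
  return out;
}

// Give each producer a distinct role and draw a few strategies for the session.
function drawSession(producers, strategyCount = 2, rand = Math.random) {
  const roles = shuffle(ROLES, rand);
  const assignments = {};
  producers.forEach((name, i) => {
    assignments[name] = roles[i % roles.length];
  });
  const strategies = shuffle(STRATEGIES, rand).slice(0, strategyCount);
  return { assignments, strategies };
}

const session = drawSession(["A", "B", "C"]); // placeholder producer names
console.log(session);
```

Because the roles are shuffled rather than picked independently, no two of the three producers end up with the same role in a given session.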

So, to answer the question: design is everything, and artful design is a thoughtful way of engaging with and contemplating the reality around and within us.

Design Etude

Uman Drawing made by a very dear friend

My child, Robert Plant

My Ecuadorian/Andean notebook

Means & Ends

Uman: Beyond its emotional quality, the Uman is a surreal extraterrestrial being, grounded in love and flow—it's very lovable. Its colors and circular shape evoke an ethereal vibe. I find it beautiful because it provides me with companionship and inspires me to flow with ease, without resistance. I believe this drawing is an end in itself—it doesn’t serve any functional purpose other than prompting me to pause and appreciate it. In that sense, it could serve the role of triggering my awareness, a form of mindfulness. Maybe all art serves this purpose.

Plant: This is the only other living being in my studio and my only responsibility besides myself at the moment. Functionally, we have a somewhat transactional relationship—I provide it with water and light, and in return, it gives me the opportunity to practice selflessness. As for its form, I appreciate how it appears to be made of knots, how its color changes with humidity, and how its slow movement serves as a constant reminder of patience.

Notebook: This is perhaps the only object with an obvious functional purpose—it allows me to write! However, in a way, I feel like this notebook chose me. I was visiting a girl I was dating at her job in a folkloric fashion store in Quito when the notebook caught my attention. Among several options, the geometric design of this one resonated with me the most. I use it almost every day and love its texture, its yellowish pages, and, of course, how the cover looks.

Guerilla Design

Since arriving at Stanford, I’ve used my bike more than I had in the past few years. When I was younger, I was a downhill racer, spending most of my time traveling through the mountains of Ecuador to race and discover beautiful trails. Because of this, I have a very personal connection with bicycles, and I wanted to create something that could add an extra dimension to my riding experience.

some images from my downhill days

I developed a simulated engine in Unity that uses GPS data from my phone to calculate my current speed. This value modulates the amplitude and pitch of several looping audio sources playing engine sounds.

This is a prototype of what that experience looks and sounds like. The speed calculation algorithm still needs improvement, but this project has sparked my interest in exploring auditory feedback in my non-digital interactions even further!

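using System.Collections;
using TMPro;
using UnityEngine;

// Maps GPS speed to the pitch and volume of looping engine samples.
public class EngineController : MonoBehaviour
{
    public AudioSource[] engineSounds;
    public TMP_Text speedText;

    [Range(0.1f, 5.0f)] public float basePitch = 0.5f;
    [Range(1.0f, 5.0f)] public float maxPitch = 3.0f;
    public float maxSpeed = 10f;           // m/s; pitch and volume peak at this speed
    [Range(0.01f, 0.1f)] public float speedSmoothing = 0.01f;
    public float gearShiftDelay = 0.05f;   // seconds that the gear-shift pitch dip lasts
    public float minVolume = 0.1f;

    private const float GearSpeedStep = 3f;       // m/s covered by each simulated gear
    private const float GearShiftPitchDip = 0.2f;
    private const float EarthRadiusM = 6371000f;
    private const float PollInterval = 0.05f;     // seconds between speed updates
    private const float StaleFixSeconds = 2f;     // no new fix for this long means "stopped"

    private Vector2 lastPosition;     // x = latitude, y = longitude, in degrees
    private double lastTimestamp;     // timestamp of the last GPS fix used
    private float lastFixTime;        // Time.time when that fix arrived
    private float rawSpeed;           // m/s, from the two most recent GPS fixes
    private float smoothedSpeed;      // m/s, after smoothing
    private int lastGear;
    private bool isShifting;
    private bool firstPositionUpdate = true;
    private AnimationCurve speedToPitchCurve;

    void Awake()
    {
        // Build the curve from the Inspector values instead of hard-coded copies.
        speedToPitchCurve = new AnimationCurve(
            new Keyframe(0f, basePitch), new Keyframe(maxSpeed, maxPitch));
    }

    void Start()
    {
        if (!Input.location.isEnabledByUser)
        {
            Debug.LogWarning("Location services are disabled by the user.");
            return;
        }
        if (engineSounds == null || engineSounds.Length == 0 || speedText == null)
        {
            Debug.LogWarning("Assign engineSounds and speedText in the Inspector.");
            return;
        }
        StartCoroutine(StartLocationService());
    }

    void Update()
    {
        if (engineSounds == null || engineSounds.Length == 0 || speedText == null) return;
        UpdateEngineSounds(smoothedSpeed);
    }

    void OnDestroy()
    {
        Input.location.Stop();
    }

    private void UpdateEngineSounds(float speed)
    {
        float clampedSpeed = Mathf.Clamp(speed, 0f, maxSpeed);
        SimulateGearShifts(clampedSpeed);

        float pitch = speedToPitchCurve.Evaluate(clampedSpeed);
        // The dip is applied here because this method rewrites the pitch every frame.
        if (isShifting) pitch = Mathf.Clamp(pitch - GearShiftPitchDip, basePitch, maxPitch);

        // InverseLerp returns 0 when maxSpeed is 0, avoiding a division by zero.
        float volume = Mathf.Lerp(minVolume, 1.0f, Mathf.InverseLerp(0f, maxSpeed, clampedSpeed));

        foreach (AudioSource engineSound in engineSounds)
        {
            if (engineSound == null) continue;
            engineSound.pitch = pitch;
            engineSound.volume = volume;
        }
    }

    private void SimulateGearShifts(float speed)
    {
        // Compare against the gear from the previous frame; comparing against the
        // current smoothed speed can never detect an upshift.
        int currentGear = Mathf.FloorToInt(speed / GearSpeedStep);
        if (currentGear > lastGear && !isShifting) StartCoroutine(GearShiftCoroutine(gearShiftDelay));
        lastGear = currentGear;
    }

    private IEnumerator GearShiftCoroutine(float delay)
    {
        isShifting = true;
        yield return new WaitForSeconds(delay);
        isShifting = false;
    }

    private IEnumerator StartLocationService()
    {
        Input.location.Start(0.5f, 0.5f); // desired accuracy and update distance, in meters

        int maxWait = 20; // wait up to 20 seconds for the service to initialize
        while (Input.location.status == LocationServiceStatus.Initializing && maxWait > 0)
        {
            yield return new WaitForSeconds(1f);
            maxWait--;
        }

        if (Input.location.status != LocationServiceStatus.Running)
        {
            Debug.LogWarning("Location service initialization failed or timed out.");
            yield break;
        }

        while (true)
        {
            UpdateSpeedFromLocation();
            yield return new WaitForSeconds(PollInterval);
        }
    }

    private void UpdateSpeedFromLocation()
    {
        LocationInfo fix = Input.location.lastData;
        Vector2 currentPosition = new Vector2(fix.latitude, fix.longitude);

        if (firstPositionUpdate)
        {
            lastPosition = currentPosition;
            lastTimestamp = fix.timestamp;
            lastFixTime = Time.time;
            firstPositionUpdate = false;
            return;
        }

        // Recompute the raw speed only when a new fix arrives. Time.deltaTime is the
        // frame time, not the time between fixes, so the fix timestamps are used instead.
        double elapsed = fix.timestamp - lastTimestamp;
        if (elapsed > 0.0)
        {
            rawSpeed = HaversineMeters(lastPosition, currentPosition) / (float)elapsed;
            lastPosition = currentPosition;
            lastTimestamp = fix.timestamp;
            lastFixTime = Time.time;
        }
        else if (Time.time - lastFixTime > StaleFixSeconds)
        {
            rawSpeed = 0f; // fixes stop arriving when the phone is not moving
        }

        SmoothSpeed(rawSpeed);
        UpdateSpeedText(smoothedSpeed);
    }

    // Great-circle distance in meters between two (latitude, longitude) points in degrees.
    private static float HaversineMeters(Vector2 a, Vector2 b)
    {
        float lat1 = a.x * Mathf.Deg2Rad;
        float lat2 = b.x * Mathf.Deg2Rad;
        float sinLat = Mathf.Sin((lat2 - lat1) / 2f);
        float sinLon = Mathf.Sin((b.y - a.y) * Mathf.Deg2Rad / 2f);
        float h = sinLat * sinLat + Mathf.Cos(lat1) * Mathf.Cos(lat2) * sinLon * sinLon;
        return 2f * EarthRadiusM * Mathf.Asin(Mathf.Sqrt(Mathf.Clamp01(h)));
    }

    private void SmoothSpeed(float newSpeed)
    {
        // Exponential moving average; a smaller speedSmoothing reacts more slowly.
        smoothedSpeed = Mathf.Lerp(smoothedSpeed, newSpeed, speedSmoothing);
    }

    private void UpdateSpeedText(float speedInMS)
    {
        // maxSpeed is in m/s, so it is not used to clamp the km/h readout.
        float speedInKmH = Mathf.Max(0f, speedInMS * 3.6f);
        if (speedText != null) speedText.text = $"Speed: {speedInKmH:F2} km/h";
    }
}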

Getting Started with ChucK

// Mateo Larrea - Stanford University
// Four Step generators, each routed through a zero-crossing detector,
// low-pass filter, delay, and reverb before reaching the dac

// Audio chain setup for four separate step generators
// (ExpDelay is a chugin rather than a built-in unit generator)
Step s1 => ZeroX z1 => LPF lpf1 => ExpDelay delay1 => JCRev reverb1 => dac;
Step s2 => ZeroX z2 => LPF lpf2 => ExpDelay delay2 => JCRev reverb2 => dac;
Step s3 => ZeroX z3 => LPF lpf3 => ExpDelay delay3 => JCRev reverb3 => dac;
Step s4 => ZeroX z4 => LPF lpf4 => ExpDelay delay4 => JCRev reverb4 => dac;

// Low-pass filter cutoff frequencies (Hz)
5000 => lpf1.freq;
1000 => lpf2.freq;
2000 => lpf3.freq;
500 => lpf4.freq;

// Delay parameters, shared by all four delays
2::second => delay1.max => delay2.max => delay3.max => delay4.max;
2::second => delay1.delay => delay2.delay => delay3.delay => delay4.delay;
1.0 => delay1.durcurve => delay2.durcurve => delay3.durcurve => delay4.durcurve;
1.0 => delay1.ampcurve => delay2.ampcurve => delay3.ampcurve => delay4.ampcurve;
10 => delay1.reps => delay2.reps => delay3.reps => delay4.reps;

// Reverb wet/dry mix ratio
0.9 => reverb1.mix;
0.5 => reverb2.mix => reverb3.mix => reverb4.mix;

// Random starting values (play_step replaces them on its first pass)
Math.random2f(-1.0, 1.0) => float v1;
Math.random2f(-1.0, 1.0) => float v2;
Math.random2f(-1.0, 1.0) => float v3;
Math.random2f(-1.0, 1.0) => float v4;

// Spork one shred per voice, each with its own base interval and jitter
spork ~ play_step(s1, v1, 200::ms, 150::ms); // 200 ms +/- 150 ms
spork ~ play_step(s2, v2, 300::ms, 100::ms); // 300 ms +/- 100 ms
spork ~ play_step(s3, v3, 200::ms, 50::ms);  // 200 ms +/- 50 ms
spork ~ play_step(s4, v4, 100::ms, 10::ms);  // 100 ms +/- 10 ms

// Keep the parent shred alive so the sporked shreds keep running
while( true ) 1::second => now;

// Drive one Step generator with random values at jittered intervals
fun void play_step(Step s, float v, dur baseInterval, dur randomness)
{
    while( true )
    {
        // Pick a new random step value. ZeroX emits a pulse only when the
        // sign changes, so a hit lands on roughly half of the steps.
        Math.random2f(-1.0, 1.0) => v;
        v => s.next;

        // Wait for the base interval plus or minus up to `randomness`
        (baseInterval + Math.random2f(-1.0, 1.0) * randomness) => now;
    }
}

Thoughts

In my ChucK implementation, I combined several examples that I liked into a single script. I began with the Step Generator and added multiple voices with randomized trigger times to create polyrhythmic patterns. After that, I focused on effects — specifically delay, reverb, and a low-pass filter.

This exercise helped me better understand the functionality of ‘spork’ and allowed me to start viewing ChucK as a text-based modular synthesizer. I found the process very enjoyable!

The result is quite interesting; it almost feels like coins or marbles colliding with a surface. The fade-in/out effect was added in TwistedWave during post-processing.

Improvements

I want to become more familiar with Low-Frequency Oscillators (LFOs) to dynamically modulate the cutoff frequency of the Low-Pass Filter.
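As a starting point for that, here is a minimal sketch of one common way to do it in ChucK: a slow sine oscillator is patched to `blackhole` so it runs without being heard, and its latest sample is read at control rate to move the filter cutoff. This is not part of my patch above; the noise source and the names and values of `centerHz`, `depthHz`, and the 5 ms update period are assumptions chosen only for illustration.

```chuck
// Sketch: a sine LFO sweeping a low-pass filter's cutoff
// (source, names, and values are illustrative assumptions)
Noise src => LPF lpf => dac;
0.2 => src.gain;
2.0 => lpf.Q;

// Route the LFO to blackhole so it is computed but never heard
SinOsc lfo => blackhole;
0.25 => lfo.freq;            // one full sweep every 4 seconds

1200.0 => float centerHz;    // cutoff the sweep is centered on
900.0 => float depthHz;      // sweep reaches centerHz +/- depthHz

while( true )
{
    // lfo.last() is the LFO's most recent output sample, in [-1, 1]
    centerHz + depthHz * lfo.last() => lpf.freq;
    // update the cutoff at control rate rather than on every sample
    5::ms => now;
}
```

To try this on the step-generator voices, the loop could be wrapped in a function that takes an `LPF` argument and sporked once per voice, in place of the fixed `lpf1.freq` to `lpf4.freq` assignments.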